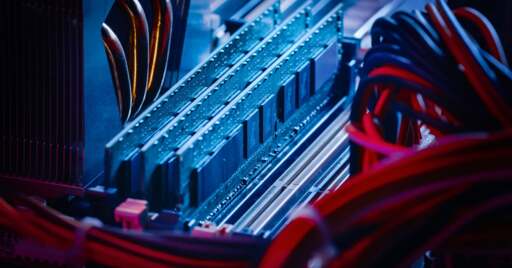

But how can I get anything done with these meager 128 GB computers?

Dudes with animated anime girl wallpapers and 13 blur effects on the desktop that complain about software bloat kill me.

The only software that matters.

It’s not running out. It’s being hoarded for the entropy machine.

Edit: anyone know if entropy machine ram can be salvaged for human use? If they use the same sticks?

Yes but you’ll need special hardware. Enterprise systems use registered “RDIMM” modules that won’t work in consumer systems. Even if your system supports ECC that is just UDIMM aka consumer grade with error correction.

This all being said, I would bet you could find some cheap EPYC or Xeon chips + an appropriate board if/when the crash comes.

I could use some extra memory. Just jam it into my head I’m sure it’ll work

Server memory is probably reusable, though it’s likely to be soldered and/or ECC modules. But a soldering iron and someone sufficiently smart can probably do it (if it isn’t directly usable).

So it’s salvageable if they don’t burn it out running everything at 500c

500°C would be way above the safe operating temps, but most likely yes.

You think the slop cultists care?

Just glad I invested in 64GBs when it only cost $200. Same ram today is nearly $700.

it might be time for me to learn GPUI, i wonder if it’s any good.

I was impressed with GPUI’s description of how they render text, and hope that it either grows into a general purpose GUI toolkit, or inspires one with a similar approach. It has a long way to go, though.

You might find this interesting:

https://github.com/longbridge/gpui-component

In the meantime, Qt is still the only cross-platform desktop toolkit that does a convincing job of native look and feel. If you’re not married to Rust, you might have a look at these new Qt bindings for Go and Zig:

https://github.com/mappu/miqt

https://github.com/rcalixte/libqt6zig

The tradeoff always was to use higher level languages to increase development velocity, and then pay for it with larger and faster machines. Moore’s law made it where the software engineer’s time and labor was the expensive thing.

Moore’s law has been dying for a decade, if not more, and as a result of this I am definitely seeing people focus more on languages that are closer to hardware. My concern is that management will, like always, not accept the tradeoffs that performance-oriented languages sometimes require and will still expect incredible levels of productivity from developers. Especially with all of the nonsense around using LLMs to “increase code writing speed”.

I still use Python very heavily, but have been investigating Zig on the side (Rust didn’t really scratch my itch) and I love the speed and performance, but you are absolutely taking a tradeoff when it comes to productivity. Things take longer to develop but once you finish developing it the performance is incredible.

I just don’t think the industry is prepared to take the productivity hit, and they’re fooling themselves, thinking there isn’t a tradeoff.

TUI enthusiasts: “I’ve trained for this day.”

P.S. Yes, I know a TUI program can still be bloated.

Yeah, gonna be interesting. Software companies working on consumer software often don’t need to care, because:

- They don’t need to buy the RAM that they’re filling up.

- They’re not the only culprit on your PC.

- Consumers don’t understand how RAM works nearly as well as they understand fuel.

- And even when consumers understand that an application is using too much, they may not be able to switch to an alternative either way, see for example the many chat applications written in Electron, none of which are interoperable.

I can see somewhat of a shift happening for software that companies develop for themselves, though. At $DAYJOB, we have an application written in Rust and you can practically see the dollar signs lighting up in the eyes of management when you tell them “just get the cheapest device to run it on” and “it’s hardly going to incur cloud hosting costs”.

Obviously this alone rarely leads to management deciding to rewrite an application/service in a more efficient language, but it certainly makes them more open to devs wanting to use these languages. Well, and who knows what happens, if the prices for Raspberry Pis and cloud hosting and such end up skyrocketing similarly.

As a programmer myself I don’t care about RAM usage, just startup time. If it takes 10s to load 150MB into memory it’s a good case for putting in the work to reduce the RAM bloat.

As a programmer myself I don’t care about RAM usage,

But you repeat yourself.

Add to the list: doing native development most often means doing it twice. Native apps are better in pretty much every metric, but rarely are they so much better that management decides it’s worth doing the same work multiple times.

If you do native, you usually need a web version, Android, iOS, and if you are lucky you can develop Windows/Linux/Mac only once and only have to take the variation between them into account.

Do the same in Electron and a single reactive web version works for everything. It’s hard to justify multiple app development teams if a single one suffices too.

iOS

At my last job we had a stretch where we were maintaining four different iOS versions of our software: different versions for iPhones and iPads, and for each of those one version in Objective-C and one in Swift. If anyone thinks “wow, that was totally unnecessary”, that should have been the name of my company.

“works” is a strong word

Works good enough for all that a manager cares about.

At this rate I suspect the best solution is to cram everything but the UI into a cross-platform library (written in, say, Rust) and keep the UI code platform-specific, calling into your cross-platform library via FFI. If you’re big enough to do that, at least.
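For anyone wondering what that split could look like, here is a minimal sketch of the Rust side only. The names `word_count` and `core_word_count` are made up for illustration, and the "business logic" is a stand-in. The assumption is that each platform's UI layer (Swift, Kotlin through JNI, and so on) would call the exported C ABI symbol:

```rust
use std::ffi::{c_char, CStr};

/// Pure-Rust core logic with no platform or UI dependencies.
/// (`word_count` is a hypothetical stand-in for real application logic.)
pub fn word_count(text: &str) -> usize {
    text.split_whitespace().count()
}

/// C ABI wrapper that platform-specific UI code could call through FFI.
/// Returns -1 for a null pointer or for input that is not valid UTF-8.
///
/// # Safety
/// `text` must be null or point to a valid NUL-terminated string.
// Rust 1.82+ spelling; older toolchains write this as `#[no_mangle]`.
#[unsafe(no_mangle)]
pub unsafe extern "C" fn core_word_count(text: *const c_char) -> i64 {
    if text.is_null() {
        return -1;
    }
    // SAFETY: the caller promises `text` is a valid NUL-terminated string.
    let c_str = unsafe { CStr::from_ptr(text) };
    match c_str.to_str() {
        Ok(s) => word_count(s) as i64,
        Err(_) => -1,
    }
}
```

The point of the design is that only the thin wrapper knows about raw pointers. Everything behind it stays ordinary, testable Rust.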

but the UI into a cross-platform library (written in, say, Rust)

Many have tried, none have succeeded. You can go allllll the way back to Java’s Swing, as well as Qt. This isn’t something that “just do it in Rust” is going to succeed at.

Or just use rust for everything with Dioxus. At least, that’s what Dioxus is going for.

Are we gui yet?

I haven’t really kept up with Rust UI frameworks (or Rust at all lately, nearly nobody wants to pay me to write Rust, they keep paying me to write everything else). Iced was the most well-known framework last I tried any UI work, with Tauri being a promising alternative (that still requires web tech unfortunately). This was just me playing around on the desktop.

Is Dioxus easy to get started with? I have like no mobile UI experience, and pretty much no UI experience in general. I prefer doing backend development. Buuuut there’s an app I want to build and I really want it to be available for both iOS and Android… And while iOS development doesn’t seem too horrible for me, Android has always been weird to me

Is Dioxus easy to get started with?

I haven’t tried it myself but I’ve read the tutorials and it looks very React-inspired. It looks quite easy to pick up. It is still based on HTML and CSS but you can use one code base for all platforms.

This equation might change a bit as more software users learn how bloated apps affect their hardware upgrade frequency & costs over time. The RAM drought brings new incentive to teach and act on that knowledge.

Management might be a bit easier to convince when made to realize that efficiency translates to more customers, while bloat translates to fewer. In some cases, developing a native app might even mean gaining traction in a new market.

I’m not too optimistic on that one. Bloated software has been an issue for the last 20 or so years at least.

At the same time upgrade cycles have become much slower. In the 90s you’d upgrade your PC every two years and each upgrade would bring whole entire use cases that just weren’t possible before. Similar story with smartphones until the mid-2010s.

Nowadays people use their PCs for upwards of 10 years and their smartphones until they drop them and crack the screen.

Devices have so much performance nowadays that you can really just run some electron apps and not worry about it. It might lag a little at times, but nobody buys a new device just because the loyalty app of your local supermarket is laggy.

I don’t like Electron either, but tbh, most apps running on Electron are so lightweight that it doesn’t matter much that they waste 10x the performance. If your device can handle a browser with 100 tabs, there’s no issue running an Electron app either.

Lastly, most Electron/Webview apps aren’t really a matter of choice. If your company uses Teams you will use Teams, no matter how shit it runs on your device. If you need to use your public transport, you will use their app, no matter if it’s Electron or not. Same with your bank, your mobile phone carrier or any other service.

We have an enormous problem with software optimization both in cycles and memory costs. I would love for that to change but the vast majority of customers don’t care. It’s painful to think about but most don’t care as long as it works “good enough” which is a nebulous measure that management can use to lie to shareholders.

Even mentioning that we’ve wiped out roughly a decade in hardware gains with how bloated and slow our software is doesn’t move the needle. All of the younger devs in our teams truly see no issue. They consider nextjs apps to be instant. Their term, not me putting words in their mouths. VSCode is blazingly fast in their eyes.

We’ve let the problem slide so long that we have a whole generation of upcoming devs that don’t even see a problem let alone care about it. Anyone who mentors devs should really hammer this home and maybe together we can all start shifting that apathy.

many chat applications written in Electron, none of which are interoperable.

This is one of my pet peeves, and a noteworthy example because chat applications tend to be left running all day long in order to notify of new messages, reducing a system’s available RAM at all times. Bloated ones end up pushing users into upgrading their hardware sooner than should be needed, which is expensive, wasteful, and harmful to the environment.

Open chat services that support third party clients have an advantage here, since someone can develop a lightweight one, or even a featherweight message notifier (so that no full-featured client has to run all day long).

Open chat is good in theory but corporate overlords need control. We can ignore them, but that is a lot of laptops with a lot of memory

Writing in Rust or “an efficient language” does nothing for ram bloat. The problem is using 3rd party libraries and frameworks. For example a JavaScript interpreter uses around 400k. The JavaScript problem is developers importing a 1GB library to compare a string.

You’d have the same bloat if you wrote in assembly.

Maybe you’re confusing memory (RAM) with storage? Because I converted some backend processing services from nodejs to rust, and it’s almost laughable how little RAM the rust counterparts used.

Just running a nodejs service took a couple of hundred MB of RAM, iirc, while the rust services could run at below 10 MB.

But I’m guessing that if you went the route of compiling an actual binary from the nodejs service, you could achieve some savings in RAM and storage either way. With Bun or Deno?

Because I converted some backend processing services from nodejs to rust,

You converted only the functions you needed and only included the functions you needed. You did not convert the entire node.js codebase and then include the entire library. That’s the problem I’m describing. A few years ago I toyed with javascript to make a LCARS style wall home automation panel. The overhead of what other people had published was absurd. I did what you did. I took out only the functions I needed, rewrote them, and reduced my program from gigabytes to megabytes even though it was still all Javascript.

Yeah, you need to do tree-shaking with JavaScript to get rid of unused library code: https://developer.mozilla.org/en-US/docs/Glossary/Tree_shaking

I would expect larger corporate projects to do so. It is something that one needs to know about and configure, but if one senior webdev works on a project, they’ll set it up pretty quickly.

On the one hand, tree shaking is often not used, even in large corporate projects.

On the other hand, tree shaking is much less effective than what a good compiler does. Tree shaking only works on a per-module basis, while compilers can optimize down to a code-line basis. Unused functions are not included, and not even variables that can be optimized out are included.

But the biggest issue (and one that tree shaking can also not really help against) is that due to the weak standard library of JS a ton of very simple things are implemented in lots of different ways. It’s not uncommon for a decently sized project (including all of the dependencies) to contain a dozen or so implementations of a padding function or some other small helper functions.

And since all of them are used somewhere in the dependency tree, none of them can be optimized out.

That’s not really a problem of the runtime or the language itself, but since the language and its environment are quite tightly coupled, it is a big problem when developing on JS.

“Mature ecosystem” it’s called in JS land.

I wish nodejs or ecmascript would have just done the Go thing and included a legit standard library.

Tree-shaking works on a per-function basis.

This isn’t Reddit. You don’t need to talk in absolutes.

Similar to WittyShizard, my experience is very different. Said Rust application uses 1200 dependencies and I think around 50 MB RAM. We had a Kotlin application beforehand, which used around 300 dependencies and 1 GB RAM, I believe. I would expect a JavaScript application of similar complexity to use a similar amount or more RAM.

And more efficient languages do have an effect on RAM usage, for example:

- Not using garbage collection means objects generally get cleared from RAM quicker.

- Iterating over substrings or list elements is likely to be implemented more efficiently, for example Rust has string slices and explicit .iter() + .collect().

- People in the ecosystem will want to use the language for use-cases where efficiency is important and then help optimize libraries.

- You’ve even got stupid shit, for example in garbage-collected languages, it has traditionally been considered best practice, that if you’re doing async, you should use immutable data types and then always create a copy of them when you want to update them. That uses a ton of RAM for stupid reasons.
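To make the string-slice and .iter() + .collect() point a bit more concrete, here is a small sketch. The function names are hypothetical, picked only for illustration. The slices returned here borrow from the original string, so no text is copied. The iterator chains are lazy, so the only allocation is the final `Vec` of borrowed slices:

```rust
/// Returns a borrowed slice of `text`; no bytes are copied.
pub fn first_word(text: &str) -> &str {
    text.split_whitespace().next().unwrap_or("")
}

/// Filters lazily and allocates only the final Vec, which holds
/// borrowed slices into `text` rather than new Strings.
pub fn long_words(text: &str, min_len: usize) -> Vec<&str> {
    text.split_whitespace()
        .filter(|w| w.len() >= min_len)
        .collect()
}

/// Explicit `.iter()` over a slice; sums lengths without any allocation.
pub fn total_len(words: &[&str]) -> usize {
    words.iter().map(|w| w.len()).sum()
}
```

A language where substring operations return fresh string objects would allocate once per word in the same situation. That is roughly the difference being described.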

This isn’t Reddit. You don’t need to talk in absolutes.

I haven’t posted anything on reddit in years. There is no need to start off a post with insults.

re: garbage collection

I wrote Java back in 1997 and the programs used a few megabytes. Garbage collection doesn’t in itself require significantly more RAM because it only delays the freeing of RAM that would also have been allocated in a non-garbage-collected language. Syntactic sugar like iterators does not in general save gigabytes of RAM.

The OP isn’t talking about 500k apps now requiring 1MB. The article talks about former 85K apps now taking GBs of RAM.

I don’t know what part of that is supposed to be an insult.

And the article may have talked of such stark differences, but I didn’t. I’m just saying that the resource usage is noticeably lower.

640k ought to be enough for anybody

Relevant community: !sustainabletech@lemmy.sdf.org

Hah, wishful thinking

that was always an option… but people don’t know how to program for efficiency because “ram is cheap” was ALWAYS the answer to everything

I think you nailed it there. Now that ram is no longer cheap, devs better start learning.

It’s not even that they didn’t know how to program for efficiency but that they chose the most inefficient tools possible to share web browser and app code

Everyone better start learning Rust.

.clone() everything!

I do kind of agree in a way though. Rust forces you to think a bit about memory and the language does tend to guide towards good design. But it’s not magic and it’s easy to write inefficient Rust too. Especially if you just clone everything. But I personally find Rust to be a good mix of low level control that feels sufficiently high level.
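A tiny sketch of the "clone everything" trap, using made-up function names. Both functions compute the same thing. The first takes ownership, so a caller who still needs the data has to `.clone()` the whole buffer. The second only borrows it:

```rust
/// Takes ownership of the buffer. A caller that wants to keep using
/// its data afterwards has to pass in a `.clone()`, duplicating it
/// on the heap.
pub fn checksum_owned(data: Vec<u8>) -> u64 {
    data.iter().map(|&b| b as u64).sum()
}

/// Borrows the buffer instead; nothing is copied.
pub fn checksum_borrowed(data: &[u8]) -> u64 {
    data.iter().map(|&b| b as u64).sum()
}
```

With a four-byte vector the difference is irrelevant. With a few hundred MB of data, the cloned call briefly doubles the memory footprint for no benefit.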

Garbage collected languages can be memory efficient too though. Having easily shared references is great!

After you’ve cloned everything you’ll Arc<Mutex<>> everything.
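Joke aside, a quick sketch of what that pattern looks like in practice (`parallel_count` is a hypothetical name). `Arc::clone` only bumps a reference count, so every thread shares the same value instead of copying it. The price is a lock on each access:

```rust
use std::sync::{Arc, Mutex};
use std::thread;

/// Spawns `workers` threads that each increment one shared counter
/// `per_worker` times, then returns the final count.
pub fn parallel_count(workers: usize, per_worker: u64) -> u64 {
    let counter = Arc::new(Mutex::new(0u64));
    let handles: Vec<_> = (0..workers)
        .map(|_| {
            // Bumps the reference count; the counter itself is not copied.
            let counter = Arc::clone(&counter);
            thread::spawn(move || {
                for _ in 0..per_worker {
                    *counter.lock().unwrap() += 1;
                }
            })
        })
        .collect();
    for handle in handles {
        handle.join().unwrap();
    }
    // Copy the value out so the lock guard is released before returning.
    let total = *counter.lock().unwrap();
    total
}
```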

Rust programs can definitely still consume a lot of memory. Not using a garbage collector certainly helps with memory usage, but it’s not going to change it from gigabytes to kilobytes. That requires completely rethinking how things are done.

That said I’m very much in favour of everyone learning Rust, as it’s a great language - but for other reasons than memory usage :)

True, but memory will be freed in a more timely manner and memory leaks probably won’t happen.
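A small sketch of what "freed in a more timely manner" means here. `Buffer` and `scope_demo` are hypothetical names. The value is dropped at the closing brace of its scope, not at some later collector run, and the flag just makes that observable:

```rust
use std::cell::Cell;

/// Hypothetical buffer type that records when it gets freed.
pub struct Buffer<'a> {
    freed: &'a Cell<bool>,
    _data: Vec<u8>,
}

impl<'a> Buffer<'a> {
    pub fn new(size: usize, freed: &'a Cell<bool>) -> Self {
        Buffer { freed, _data: vec![0; size] }
    }
}

impl<'a> Drop for Buffer<'a> {
    fn drop(&mut self) {
        // Runs as the value goes out of scope, just before its memory is released.
        self.freed.set(true);
    }
}

/// Returns (freed while the buffer was in scope, freed after the scope ended).
pub fn scope_demo() -> (bool, bool) {
    let freed = Cell::new(false);
    let during;
    {
        let _buf = Buffer::new(1024, &freed);
        during = freed.get();
    } // `_buf` is dropped here, deterministically.
    (during, freed.get())
}
```

This says nothing about how much memory a program needs at its peak. It only shows that the release point is predictable.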

I’m not sure what there’s less excuse for, the software bloat or the memory running out.